Stereo Reconstruction

The goal of dense stereo vision is to reconstruct realistic 3D models from two or more stereo images. In its most elementary setup, stereo vision is closely related to the optical flow problem between two images. Compared to pure optical flow, the motion of the pixels in a stereo setting is additionally restricted by the geometry of the image pair, the so-called epipolar geometry. The epipolar geometry is determined by the relative position of the two cameras and by the internal camera parameters, such as the focal length. The traditional stereo reconstruction pipeline consists of two main stages: camera calibration and dense matching. Camera calibration is the retrieval of the external camera pose and orientation as well as the internal parameters, and is typically performed with the help of sparse image features. Establishing dense correspondences allows for a full 3D reconstruction and is generally done by global discrete or continuous optimisation on the rectified images. Knowledge about the epipolar geometry considerably facilitates the search for correspondences between the images. Conversely, the estimation of the epipolar geometry can benefit from a large number of accurate pixel correspondences, such as those provided by optical flow methods.

We have made contributions to both the fully and the partially calibrated stereo setting. In the fully calibrated setting, it is assumed that the cameras are either completely calibrated, or that the epipolar geometry of the image pair is known a priori. In this case, the image correspondence problem reduces to a one-dimensional search along the known epipolar lines. In the partially calibrated setting, only the intrinsic camera parameters are given, and nothing further is known about the epipolar geometry of the stereo pair. Here, both the camera geometry (pose and orientation) and the dense set of image correspondences have to be estimated before a 3D reconstruction of the scene can be performed.
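To illustrate why a known epipolar geometry turns matching into a one-dimensional search, the following minimal sketch maps a pixel of the left image to its epipolar line in the right image. It assumes the convention x_right^T F x_left = 0; the function names and the example matrix (a rectified pair with purely horizontal camera displacement) are illustrative choices, not taken from the publications listed on this page.

```python
import numpy as np

def epipolar_line(F, x_left):
    """Return the epipolar line l' = F x in the right image.

    The line (a, b, c) is scaled so that (a, b) has unit length, which
    makes a*u + b*v + c a signed distance in pixels.
    """
    x_h = np.array([x_left[0], x_left[1], 1.0])
    line = F @ x_h
    return line / np.hypot(line[0], line[1])

def point_line_distance(line, x_right):
    """Distance in pixels between a right-image point and an epipolar line."""
    return abs(line[0] * x_right[0] + line[1] * x_right[1] + line[2])

# Assumed example: rectified pair, i.e. F is the cross-product matrix of (1, 0, 0).
F_rectified = np.array([[0.0, 0.0, 0.0],
                        [0.0, 0.0, -1.0],
                        [0.0, 1.0, 0.0]])

# The pixel (120, 45) in the left view maps to the image row v = 45 on the right.
line = epipolar_line(F_rectified, (120.0, 45.0))
on_line = point_line_distance(line, (100.0, 45.0))   # candidate on the same row
off_line = point_line_distance(line, (100.0, 48.0))  # candidate three rows below
```

In the rectified case every epipolar line is an image row, so a correspondence is fully described by a scalar disparity along that row.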

Fully Calibrated Stereo

Optical Flow Goes Stereo

In [1] a variational approach for dense stereo vision was presented. It exploits the similarity between optical flow and fully calibrated stereo by integrating the epipolar constraint as a hard constraint. This forces corresponding pixels to lie on epipolar lines. By adding successful concepts from the accurate optical flow method of Brox et al., we could further improve the quality of the results [2]. These concepts encompass the use of robust penaliser functions and the gradient constancy assumption, yielding a method that is robust under varying illumination and noise.
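As a rough sketch of how these concepts fit together, consider a rectified pair in which the hard epipolar constraint reduces the unknown to a scalar disparity d(x, y). An energy in the spirit of Brox et al. could then read as follows. The symbols I_l, I_r, γ, α and ε are notation assumed here for illustration, and the exact functional used in [2] may differ.

```latex
E(d) = \int_\Omega
  \Psi\!\left( |I_r(x+d,\,y) - I_l(x,\,y)|^2
             + \gamma\, |\nabla I_r(x+d,\,y) - \nabla I_l(x,\,y)|^2 \right)
  + \alpha\, \Psi\!\left( |\nabla d|^2 \right) \, dx\, dy,
\qquad
\Psi(s^2) = \sqrt{s^2 + \varepsilon^2}
```

The first term combines the grey value and gradient constancy assumptions, the second term enforces smoothness of the disparity, and the robust penaliser Ψ grows only linearly for large arguments, which reduces the influence of outliers and preserves disparity discontinuities.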

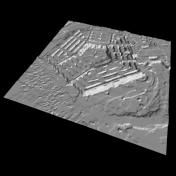

(a) Left view (b) Right view (c) Reconstruction

The above figure shows an aerial view of the Pentagon building (available from the CMU VASC Image Database). The two images form a stereo pair with known epipolar geometry. The left image is depicted in Fig. (a) and the right image in Fig. (b). A 3D reconstruction obtained from these two images is shown in Fig. (c).

Robust Multi-View Reconstruction

In order to improve the quality of stereo reconstruction results, one can process more than two images of the same scene. In [3] we presented a variational multi-view stereo approach for small baseline distances.

A Novel Anisotropic Disparity-Driven Regularisation Strategy

The smoothness terms used in previous variational stereo methods suffer from at least one of the following drawbacks: either an isotropic disparity-driven smoothness term is used that ignores the directional information of the disparity field, or an anisotropic image-driven regulariser is applied that suffers from oversegmentation artifacts. In [6] we proposed a novel anisotropic disparity-driven smoothness strategy within a PDE-based framework. It remedies both drawbacks and improves the quality of the disparity estimation. We also showed that an adaptation of the anisotropic flow-driven smoothness term known from optical flow does not work in the stereo case.

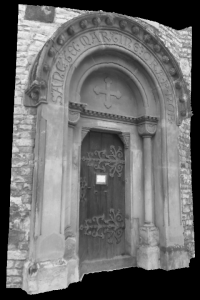

(a) Left view (b) Right view (c) Disparity

(d) Reconstruction (e) Reconstruction with texture

This figure shows in (a) and (b) a stereo pair depicting the portal of a church (available from the Technical University of Prague). The grey-coded disparity in (c) was computed with our method from [6]. We visualise the disparity as a height field in (d), which gives an impression of the quality of our results. In (e) we show a texture-mapped version of the height field.

Sophisticated Numerics: An Adaptive Derivative Discretisation Scheme

The implementation of variational approaches requires discretising the derivatives that occur in them. Previous works use "standard" central finite difference approximations for this purpose. Adopting a successful concept from the theory of hyperbolic partial differential equations, we presented in [7] a sophisticated adaptive derivative discretisation scheme. It blends central and correctly oriented one-sided (upwind) differences based on a smoothness measure. We found that such a procedure can improve the results of variational approaches to stereo reconstruction and optical flow to a similar degree as model refinements.
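The blending idea can be illustrated with a small 1D sketch. It is not the scheme from [7]: the smoothness measure `theta` and the rule for choosing the one-sided difference (the one with the smaller magnitude) are simplifying assumptions made here for illustration only.

```python
import numpy as np

def adaptive_derivative(f, h=1.0, eps=1e-8):
    """Blend central and one-sided differences of a 1D signal.

    Assumed, illustrative rules (not the scheme of [7]):
    - theta in [0, 1] measures non-smoothness: it is 0 where forward and
      backward differences agree and close to 1 at kinks and edges.
    - the one-sided difference is the one with the smaller magnitude.
    """
    f = np.asarray(f, dtype=float)
    fp = np.pad(f, 1, mode="edge")            # replicate boundary values
    forward = (fp[2:] - fp[1:-1]) / h
    backward = (fp[1:-1] - fp[:-2]) / h
    central = 0.5 * (forward + backward)

    theta = np.abs(forward - backward) / (np.abs(forward) + np.abs(backward) + eps)
    one_sided = np.where(np.abs(forward) <= np.abs(backward), forward, backward)

    # Smooth regions keep the central difference, edges switch to one-sided.
    return (1.0 - theta) * central + theta * one_sided

ramp = adaptive_derivative(2.0 * np.arange(6.0))           # smooth signal
step = adaptive_derivative([0.0, 0.0, 0.0, 1.0, 1.0, 1.0])  # ideal edge
```

On the ramp, the interior values coincide with the central difference. At the step, the central difference would average across the edge, whereas the blend falls back to the one-sided difference taken from the smooth side.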

Partially Calibrated Stereo

Estimation of the Fundamental Matrix from Optical Flow

The fundamental matrix completely describes the epipolar geometry of a pair of stereo images without the need for fully calibrated cameras. In practice, the fundamental matrix is mostly estimated by matching salient image features such as SIFT points or KLT features and then minimising a cost function over the obtained sparse set of correspondences. In [4] and [8] we investigate to what extent dense correspondences resulting from optical flow computation can be used to estimate the fundamental matrix from a pair of uncalibrated images. It turns out that the large number of matches provided by variational optical flow methods and the absence of gross outliers therein make it possible to obtain accurate estimates using a robust least-squares fit. We further show that these results are competitive with state-of-the-art feature-based methods that make use of outlier rejection, robust statistics and advanced error measures.
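A least-squares fit of the fundamental matrix to a set of correspondences can be sketched as follows. This is the plain normalised eight-point algorithm without any robustification, shown here as a generic illustration rather than as the estimator used in [4] and [8]. The synthetic, noise-free correspondences stand in for a dense flow field, where each pixel x1 would be matched to x2 = x1 + (u, v); the camera parameters are arbitrary assumed values.

```python
import numpy as np

def normalise(pts):
    """Hartley normalisation: centroid at the origin, mean distance sqrt(2)."""
    centroid = pts.mean(axis=0)
    scale = np.sqrt(2.0) / np.linalg.norm(pts - centroid, axis=1).mean()
    T = np.array([[scale, 0.0, -scale * centroid[0]],
                  [0.0, scale, -scale * centroid[1]],
                  [0.0, 0.0, 1.0]])
    pts_h = np.column_stack([pts, np.ones(len(pts))])
    return pts_h @ T.T, T

def fundamental_least_squares(x1, x2):
    """Estimate F with x2^T F x1 = 0 from N >= 8 correspondences (N x 2 arrays)."""
    p1, T1 = normalise(np.asarray(x1, dtype=float))
    p2, T2 = normalise(np.asarray(x2, dtype=float))
    # One linear equation per match in the row-major entries of F.
    A = np.einsum("ni,nj->nij", p2, p1).reshape(len(p1), 9)
    _, _, Vt = np.linalg.svd(A)
    F = Vt[-1].reshape(3, 3)              # minimiser of |A f| subject to |f| = 1
    U, s, Vt = np.linalg.svd(F)
    F = U @ np.diag([s[0], s[1], 0.0]) @ Vt   # enforce rank 2
    F = T2.T @ F @ T1                         # undo the normalisation
    return F / np.linalg.norm(F)

def epipolar_distance(F, x1, x2):
    """Pixel distance of each point x2 to the epipolar line F x1."""
    x1_h = np.column_stack([x1, np.ones(len(x1))])
    x2_h = np.column_stack([x2, np.ones(len(x2))])
    lines = x1_h @ F.T
    return np.abs(np.sum(lines * x2_h, axis=1)) / np.hypot(lines[:, 0], lines[:, 1])

# Synthetic scene and cameras (assumed values, for illustration only).
rng = np.random.default_rng(0)
X = np.column_stack([rng.uniform(-1, 1, 200),
                     rng.uniform(-1, 1, 200),
                     rng.uniform(4, 8, 200)])
K = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
angle = 0.1
R = np.array([[np.cos(angle), 0.0, np.sin(angle)],
              [0.0, 1.0, 0.0],
              [-np.sin(angle), 0.0, np.cos(angle)]])
t = np.array([-0.5, 0.05, 0.1])

def project(P):
    """Pinhole projection of 3D points given in camera coordinates."""
    p = P @ K.T
    return p[:, :2] / p[:, 2:3]

x1 = project(X)              # first camera at the origin
x2 = project(X @ R.T + t)    # second camera rotated and translated
F = fundamental_least_squares(x1, x2)
max_error = epipolar_distance(F, x1, x2).max()
```

With real flow fields the residuals are not zero, which is where a robust penalisation of the residuals, as mentioned in the text, would replace the quadratic cost of this sketch.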

Joint Estimation of Correspondences and Fundamental Matrix

In [5] and [8] we integrate the epipolar constraint as a soft constraint into the optical flow methods of Brox et al. and Zimmer et al. This provides a coupling between the unknown optical flow (scene structure) and the unknown epipolar geometry (camera motion). The advantage of a joint estimation of both unknowns is that the optical flow is not only used to estimate the fundamental matrix, but that knowledge about the epipolar geometry is at the same time fed back into the correspondence estimation. This way we estimate a scene structure that is most consistent with the camera motion. We demonstrate that this joint method not only improves the estimation of the fundamental matrix, but also yields better optical flow results than an approach without epipolar geometry estimation. Since it is often realistic to assume that the internal camera parameters are known, the relative camera pose and orientation can be extracted from the fundamental matrix. Our method thereby combines the two main stages of traditional 3D reconstruction into one optimisation framework: the recovery of the camera matrices and the scene surface.
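The extraction of the relative pose from the fundamental matrix can be sketched with the standard textbook decomposition of the essential matrix. The sketch assumes the convention x2^T F x1 = 0 with intrinsic matrices K1 and K2; the function names and the example camera are illustrative and not taken from [5] or [8].

```python
import numpy as np

def essential_from_fundamental(F, K1, K2):
    """E = K2^T F K1, projected onto singular values (1, 1, 0)."""
    U, _, Vt = np.linalg.svd(K2.T @ F @ K1)
    return U @ np.diag([1.0, 1.0, 0.0]) @ Vt

def pose_candidates(E):
    """Return the four (R, t) pairs compatible with E; t has unit length."""
    U, _, Vt = np.linalg.svd(E)
    # E is only defined up to sign, so both factors can be made proper rotations.
    if np.linalg.det(U) < 0:
        U = -U
    if np.linalg.det(Vt) < 0:
        Vt = -Vt
    W = np.array([[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])
    t = U[:, 2]
    return [(U @ W @ Vt, t), (U @ W @ Vt, -t),
            (U @ W.T @ Vt, t), (U @ W.T @ Vt, -t)]

# Assumed example camera and motion, used to build a consistent F.
K = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
angle = 0.1
R_true = np.array([[np.cos(angle), 0.0, np.sin(angle)],
                   [0.0, 1.0, 0.0],
                   [-np.sin(angle), 0.0, np.cos(angle)]])
t_true = np.array([-0.5, 0.05, 0.1])
t_true = t_true / np.linalg.norm(t_true)
t_cross = np.array([[0.0, -t_true[2], t_true[1]],
                    [t_true[2], 0.0, -t_true[0]],
                    [-t_true[1], t_true[0], 0.0]])
K_inv = np.linalg.inv(K)
F = K_inv.T @ (t_cross @ R_true) @ K_inv

candidates = pose_candidates(essential_from_fundamental(F, K, K))
```

Only one of the four candidates places the reconstructed points in front of both cameras, so in practice the correct pair is selected by triangulating a few correspondences. The translation is recovered only up to scale.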

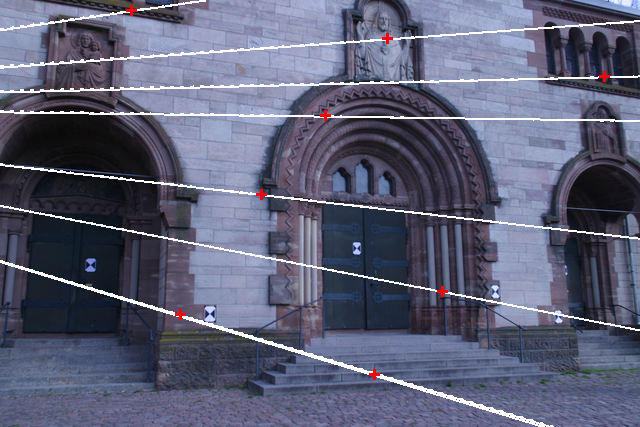

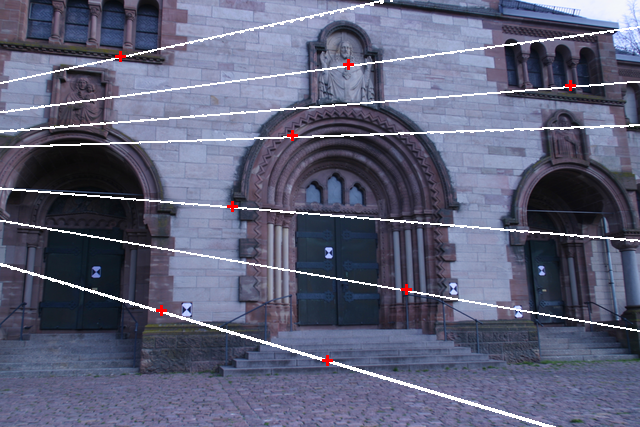

(a) Left view with estimated epipolar lines (b) Right view with estimated epipolar lines

(c) Untextured reconstruction

This figure shows in (a) and (b) a stereo pair consisting of two images from the Herz-Jesu data set (available from the EPFL in Lausanne). The epipolar geometry, depicted by the epipolar lines, was computed with our joint method from [5] and [8]. In (c) we show an untextured surface reconstruction obtained from the optical flow and the extracted camera matrices.

[1] L. Alvarez, R. Deriche, J. Sánchez, J. Weickert:
Dense disparity map estimation respecting image derivatives: a PDE and scale-space based approach.
Journal of Visual Communication and Image Representation, Vol. 13, No. 1/2, 3-21, March/June 2002.
Revised version of Technical Report No. 3874, ROBOTVIS, INRIA Sophia-Antipolis, France, January 2000.

[2] N. Slesareva, A. Bruhn, J. Weickert:
Optical flow goes stereo: A variational method for estimating discontinuity-preserving dense disparity maps.
In W. Kropatsch, R. Sablatnig, A. Hanbury (Eds.): Pattern Recognition. Lecture Notes in Computer Science, Vol. 3663, 33-40, Springer, Berlin, 2005.
Awarded a DAGM 2005 Paper Prize.

[3] N. Slesareva, T. Bühler, K. Hagenburg, J. Weickert, A. Bruhn, Z. Karni, H.-P. Seidel:
Robust variational reconstruction from multiple views.
In B.K. Ersbøll, K.S. Pedersen (Eds.): Image Analysis. Lecture Notes in Computer Science, Vol. 4522, 173-182, Springer, Berlin, 2007.

[4] M. Mainberger, A. Bruhn, J. Weickert:
Is dense optic flow useful to compute the fundamental matrix?
Updated version with errata. In A. Campilho, M. Kamel (Eds.): Image Analysis and Recognition. Lecture Notes in Computer Science, Vol. 5112, 630-639, Springer, Berlin, 2008.

[5] L. Valgaerts, A. Bruhn, J. Weickert:
A variational approach for the joint recovery of the fundamental matrix and the optical flow.
In G. Rigoll (Ed.): Pattern Recognition. Lecture Notes in Computer Science, Vol. 5096, 314-324, Springer, Berlin, 2008.

[6] H. Zimmer, A. Bruhn, L. Valgaerts, M. Breuß, J. Weickert, B. Rosenhahn, H.-P. Seidel:
PDE-based anisotropic disparity-driven stereo vision.
In O. Deussen, D. Keim, D. Saupe (Eds.): Vision, Modeling, and Visualization 2008. AKA Heidelberg, 263-272, October 2008.

[7] H. Zimmer, M. Breuß, J. Weickert, H.-P. Seidel:
Hyperbolic numerics for variational approaches to correspondence problems.
In X.-C. Tai et al. (Eds.): Scale-Space and Variational Methods in Computer Vision. Lecture Notes in Computer Science, Vol. 5567, 636-647, Springer, Berlin, 2009.

[8] L. Valgaerts, A. Bruhn, M. Mainberger, J. Weickert:
Dense versus Sparse Approaches for Estimating the Fundamental Matrix.
International Journal of Computer Vision (IJCV), 2011. Accepted for publication.
Revised version of Technical Report No. 263, Department of Mathematics, Saarland University, Saarbrücken, Germany, 2010.
