Seminar

Machine Learning for Image Analysis

Summer Term 2018


(Main) Seminar: Machine Learning for Image Analysis

Leif Bergerhoff,

Prof. Joachim Weickert


(Main) Seminar (2 h)

Notice for bachelor/master students of mathematics: This is a »Hauptseminar« in the sense of these study programs.

|

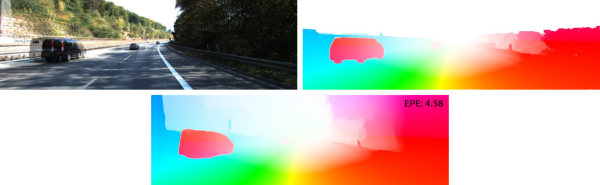

| Ilg et al., FlowNet 2.0: Evolution of Optical Flow Estimation with Deep Networks. |

NEWS:

05/30/2018: The write-up guidelines are online.

02/01/2018: The list of assigned topics is online.

02/01/2018: The slides of the introductory meeting are online.

01/30/2018: Registration for the seminar is closed. There are no more places left.

01/23/2018: There are no more places left. You can still register and we will put you on the waiting list (in case someone drops out of the seminar). We therefore ask you to come to the introductory meeting, too.

01/23/2018: Registration for the seminar is open. (see below)

01/18/2018: Registration for the seminar is possible from Tuesday, January 23, 2018, 12 noon.

Important Dates – Description – Registration – Requirements – Introductory Meeting – Write-up – Overview of Topics – Supplementary Material – Literature

Introductory meeting (mandatory):

The introductory meeting will take place in building E1.7, room 4.10 on Wednesday, January 31, 2018, 4:15 p.m. In this meeting, we will assign the topics to the participants. Attendance is mandatory for all participants. Do not forget to register first (see below).

Regular meetings during the summer term 2018:

Building E1.7, room 4.10 on Wednesdays, 4:15 p.m.

Contents: Machine learning has received increasing attention over the last few years and has become an integral part of many methods in image processing and computer vision. Based on the book by Goodfellow et al., this seminar aims to provide an overview of the relevant machine learning techniques, with a focus on deep networks. The application of deep networks to image analysis will be discussed by means of recent research papers.

Prerequisites: The seminar is for advanced bachelor or master students in Visual Computing, Mathematics, or Computer Science. Basic knowledge of linear algebra, probability theory, and numerics is required. Elementary knowledge in machine learning and image analysis is helpful but not necessary.

Language: The book by Goodfellow et al. and all papers are written in English, and English is the language of presentation.

Since the number of talks is limited, we ask for your understanding that participants will be considered strictly in the order of registration – no exceptions.

There are no more places left. The registration is closed.

Regular attendance: You must attend all seminar meetings unless you have a verifiable important reason (medical certificate).

Talk: Talk duration is 30 min, plus 15 min for discussion. Please do not deviate from this time schedule. You may give a presentation using a data projector, overhead projector or blackboard, or mix these media appropriately. Your presentation must be delivered in English. Your slides and your write-up, too, have to be in English.

Opponent: Besides his or her main topic, everyone is assigned two more topics for which he or she takes on the role of an (active) opponent. This includes preparing meaningful questions as well as chairing the discussion after the corresponding presentation.

Write-up: The write-up has to be handed in three weeks after the lecture period ends. The deadline is Friday, August 10, 2018, 23:59. The write-up should summarise your talk and has to consist of 5 pages per speaker. Electronic submission is preferred. File format for electronic submissions is PDF – text processor files (like .doc) are not acceptable. Do not forget to hand in your write-up: Participants who do not submit a write-up cannot obtain the certificate for the seminar.

Plagiarism: Adhere to the standards of scientific referencing and avoid plagiarism: Quotations and copied material (such as images) must be clearly marked as such, and a bibliography is required. Otherwise the seminar counts as failed.

Mandatory consultation: Your talk preparation has to be presented to your seminar supervisor no later than one week before the talk is given. It is your responsibility to approach your supervisor in good time and make an appointment.

No-shows: No-shows are unfair to your fellow students: Some talks are based on previous talks, and your seminar place might have prevented the participation of another student. Thus, in case you do not appear for your scheduled talk (except for reasons beyond your control), we reserve the right to exclude you from future seminars of our group.

Participation in discussions: The discussions after the presentations are a vital part of this seminar. This means that the audience (i.e. all participants) poses questions and tries to find positive and negative aspects of the proposed idea. This participation is part of your final grade.

Being on time: To avoid disturbing or interrupting the speaker, all participants have to be in the seminar room on time. Participants who are regularly late must expect a negative influence on their grade.

Here are the slides from the introductory meeting. They contain important information for preparing a good talk.

The subsequently linked document provides guidelines for the creation of your write-up. Make sure to consider them during the preparation of your final report.

We will discuss the following book chapters and research papers. If your registration was successful, the password for access will be sent to you before the first meeting.

| No. | Date (DD/MM) | Speaker | Opponents | Topic |
|---|---|---|---|---|
| 1 | 02/05 | Shubhendu Jena (Slides) | Youmna Ismaeil, Usama Bin Aslam | Machine Learning Basics (part 1). I. Goodfellow, Y. Bengio and A. Courville: Deep Learning, Chapter 5.1-5.4 |
| 2 | 02/05 | Vikramjit Sidhu (Slides) | Yue Fan, Garvita Tiwari | Machine Learning Basics (part 2). I. Goodfellow, Y. Bengio and A. Courville: Deep Learning, Chapter 5.5-5.11 |
| 3 | 09/05 | Subhabrata Choudhury (Slides) | Dawei Zhu, Muhammad Moiz Sakha | Deep Feedforward Networks. I. Goodfellow, Y. Bengio and A. Courville: Deep Learning, Chapter 6 |
| 4 | 09/05 | Osman Ali Mian (Slides) | Anilkumar Erappanakoppal Swamy | Regularisation for Deep Learning. I. Goodfellow, Y. Bengio and A. Courville: Deep Learning, Chapter 7 |
| 5 | 16/05 | Mohamad Aymen Mir (Slides) | Viktor Daropoulos, Sahar Abdelnabi | Optimisation for Training Deep Models. I. Goodfellow, Y. Bengio and A. Courville: Deep Learning, Chapter 8 |
| 6 | 16/05 | Vedika Agarwal (Slides) | Tim Bruxmeier, Frederic Hoffmann | Convolutional Networks. I. Goodfellow, Y. Bengio and A. Courville: Deep Learning, Chapter 9 |
| 7 | 23/05 | Youmna Ismaeil (Slides) | Shubhendu Jena, Usama Bin Aslam | Sequence Modeling: Recurrent and Recursive Nets. I. Goodfellow, Y. Bengio and A. Courville: Deep Learning, Chapter 10 |
| 8 | 23/05 | Yue Fan (Slides) | Vikramjit Sidhu, Garvita Tiwari | Why and When Can Deep - but Not Shallow - Networks Avoid the Curse of Dimensionality: a Review. T. Poggio, H. Mhaskar, L. Rosasco, B. Miranda, and Q. Liao |
| 9 | 30/05 | Dawei Zhu (Slides) | Subhabrata Choudhury, Muhammad Moiz Sakha | Why does deep and cheap learning work so well? H. Lin and M. Tegmark |
| 10 | 30/05 | Anilkumar Erappanakoppal Swamy (Slides) | Osman Ali Mian | Visualizing and Understanding Convolutional Networks. M. Zeiler and R. Fergus |
| 11 | 06/06 | Viktor Daropoulos (Slides) | Mohamad Aymen Mir, Sahar Abdelnabi | Variational Networks: Connecting Variational Methods and Deep Learning. E. Kobler, T. Klatzer, K. Hammernik, and T. Pock |
| 12 | 06/06 | Tim Bruxmeier (Slides) | Vedika Agarwal, Frederic Hoffmann | End-to-End Learning for Image Burst Deblurring. P. Wieschollek, B. Schölkopf, H. Lensch, and M. Hirsch |
| 13 | 13/06 | Usama Bin Aslam (Slides) | Shubhendu Jena, Youmna Ismaeil | Deep Discrete Flow. F. Güney and A. Geiger |
| 14 | 13/06 | Garvita Tiwari (Slides) | Vikramjit Sidhu, Yue Fan | FlowNet 2.0: Evolution of Optical Flow Estimation with Deep Networks. E. Ilg, N. Mayer, T. Saikia, M. Keuper, A. Dosovitskiy, and T. Brox |
| 15 | 20/06 | Muhammad Moiz Sakha (Slides) | Subhabrata Choudhury, Dawei Zhu | Deep Watershed Transform for Instance Segmentation. M. Bai and R. Urtasun |
| 16 | 20/06 | Sahar Abdelnabi (Slides) | Mohamad Aymen Mir, Viktor Daropoulos | CLKN: Cascaded Lucas-Kanade Networks for Image Alignment. C.-H. Chang, C.-N. Chou, and E. Chang |
| 17 | 27/06 | NO TALK | Osman Ali Mian, Anilkumar Erappanakoppal Swamy | Non-local Color Image Denoising with Convolutional Neural Networks. S. Lefkimmiatis |
| 18 | 27/06 | Frederic Hoffmann (Slides) | Vedika Agarwal, Tim Bruxmeier | Noise-Blind Image Deblurring. M. Jin, S. Roth, and P. Favaro |

- Discussions and reading group videos covering the book by Goodfellow et al.

- Reading group covering the book by Goodfellow et al.

- M. Bai and R. Urtasun: Deep Watershed Transform for Instance Segmentation. The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), July 2017.
- C.-H. Chang, C.-N. Chou, and E. Chang: CLKN: Cascaded Lucas-Kanade Networks for Image Alignment. The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), July 2017.
- I. Goodfellow, Y. Bengio and A. Courville: Deep Learning. Adaptive computation and machine learning. MIT Press, 2016. Online version.
- F. Güney and A. Geiger: Deep Discrete Flow. Asian Conference on Computer Vision (ACCV), November 2016.
- E. Ilg, N. Mayer, T. Saikia, M. Keuper, A. Dosovitskiy, and T. Brox: FlowNet 2.0: Evolution of Optical Flow Estimation with Deep Networks. The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), July 2017.
- M. Jin, S. Roth, and P. Favaro: Noise-Blind Image Deblurring. The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), July 2017.
- E. Kobler, T. Klatzer, K. Hammernik, and T. Pock: Variational Networks: Connecting Variational Methods and Deep Learning. German Conference on Pattern Recognition (GCPR), September 2017.
- S. Lefkimmiatis: Non-local Color Image Denoising with Convolutional Neural Networks. The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), July 2017.
- H. Lin and M. Tegmark: Why does deep and cheap learning work so well? CoRR, abs/1608.08225, 2016.
- T. Poggio, H. Mhaskar, L. Rosasco, B. Miranda, and Q. Liao: Why and When Can Deep - but Not Shallow - Networks Avoid the Curse of Dimensionality: a Review. CoRR, abs/1611.00740, 2016.
- P. Wieschollek, B. Schölkopf, H. Lensch, and M. Hirsch: End-to-End Learning for Image Burst Deblurring. Asian Conference on Computer Vision (ACCV), November 2016.
- M. Zeiler and R. Fergus: Visualizing and Understanding Convolutional Networks. European Conference on Computer Vision, September 2014.

MIA Group

©2001-2023
