Seminar

Deep Learning and Optimisation for Visual Computing

Winter Term 2020

(Main) Seminar: Deep Learning and Optimisation for Visual Computing

Jon Arnar Tomasson,

Karl Schrader,

Prof. Joachim Weickert

Winter Term 2020

(Main) Seminar (2 h)

Notice for bachelor/master students of mathematics: This is a »Hauptseminar« in the sense of these study programs.

|

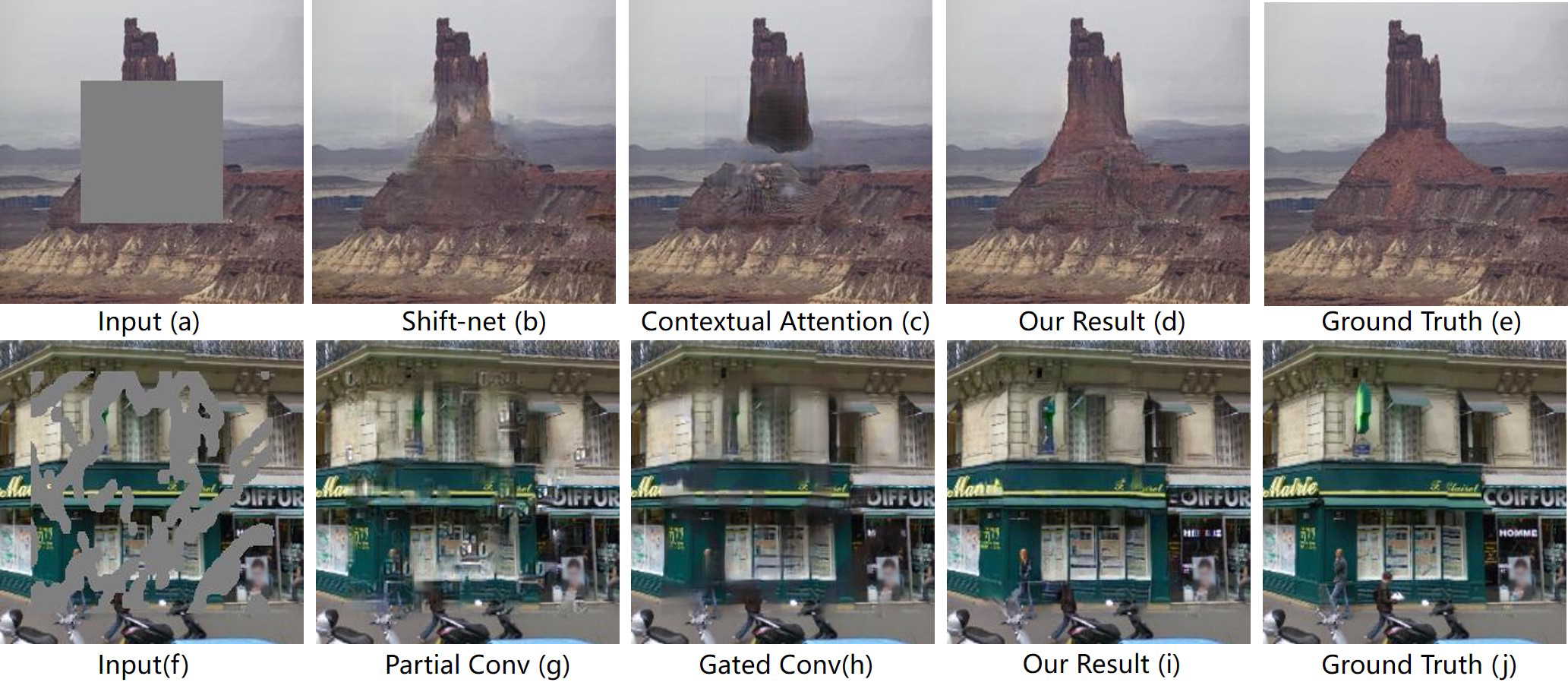

| Image inpainting. (Authors: Hongyu Liu, Bin Jiang, Yi Xiao, Chao Yang) |

NEWS:

October 28, 2020:

The registration is now closed.

October 21, 2020:

The registration is now open.

October 20, 2020:

The introductory meeting will take place on Friday, October 30, 2020, 2:15 p.m., via Teams.

October 13, 2020:

The seminar will be completely virtual.

All students will be made members of a Teams group. Communication will take place there; important dates will still be posted here.

Introductory meeting (mandatory):

The introductory meeting will take place on Friday, October 30, 2020, 2:15 p.m., via Teams.

In this meeting, we will assign the topics to the participants.

Attendance is mandatory for all participants.

Do not forget to register first (see below).

Regular meetings during the winter term 2020:

Tuesday, 4:15 p.m., web meetings via Teams

Contents: Deep learning methods have recently produced state-of-the-art results for many different tasks in the field of computer vision. This includes image restoration tasks such as denoising, image inpainting, and deblurring, as well as computer vision tasks such as optic flow estimation and segmentation. In this seminar we will cover the basic concepts in the field of deep learning, along with recent state-of-the-art methods, and how we can optimise these networks.

Prerequisites: The seminar is for advanced bachelor or master students in Visual Computing, Mathematics, or Computer Science. Basic mathematical knowledge (e.g. Mathematik für Informatiker I-III) and some knowledge of image processing and computer vision are required.

Language: All papers are written in English, and English is the language of presentation.

The registration for this course is closed. You can see the order of the registrations here.

Regular attendance: You must attend all virtual seminar meetings. If you are sick, please send a medical certificate via mail to Jon Tomasson. If you have technical difficulties, let us know as soon as possible.

Talk: Your talk will consist of a 20-minute prerecorded video divided into 3-4 parts. A supervisor will stream the parts live during the virtual seminar meeting. After each part, there will be room for questions. After the talk, there will be a final discussion. Your presentation must be delivered in English, and your slides and write-up must also be in English. Send your video files in MP4 format and your presentation slides in PDF format to Jon Tomasson at least 24 hours before the seminar meeting.

Write-up: The write-up has to be handed in three weeks after the lecture period ends. The deadline is Friday, February 26, 23:59. The write-up should summarise your talk and has to consist of 5 pages per speaker. Please adhere to the guidelines for write-ups posted in our Teams group. Submit your write-up in pdf format directly in the corresponding assignment in Teams.

Plagiarism: Adhere to the standards of scientific referencing and avoid plagiarism: Quotations and copied material (such as images) must be clearly marked as such, and a bibliography is required. Otherwise the seminar counts as failed. See the write-up guidelines for a detailed explanation on how to cite correctly.

Mandatory consultation: Your talk preparation (including a preliminary video and presentation slides) has to be presented to your seminar supervisor no later than one week before the talk is given. It is your responsibility to approach us in good time and make an appointment for a video call.

No-shows: No-shows are unfair to your fellow students: Some talks are based on previous talks, and your seminar place might have prevented the participation of another student. Thus, if you do not appear for your scheduled talk (except for reasons beyond your control), we reserve the right to exclude you from future seminars of our group.

Participation in discussions: The discussions after the presentations are a vital part of this seminar. This means that the audience (i.e. all participants) poses questions and tries to find positive and negative aspects of the proposed idea.

Being on time: To avoid interrupting the seminar, all participants have to be logged into the web meeting on time. Please make sure to log in early in case there are technical difficulties. Participants who are regularly late must expect a negative influence on their grade.

Here are the slides from the introductory meeting. They contain important information for preparing a good talk.

MIA Group

©2001-2023
